Research

Detecting Insincere Questions from Text: A Transfer Learning Approach

The internet today has become an unrivalled source of information where people converse on content-based websites such as Quora, Reddit, StackOverflow and Twitter, asking questions and sharing knowledge with the world. A major problem on such websites is the proliferation of toxic comments and insincere posts, in which users spread toxic and divisive content instead of engaging with a sincere motive. The....

Paper View on Github

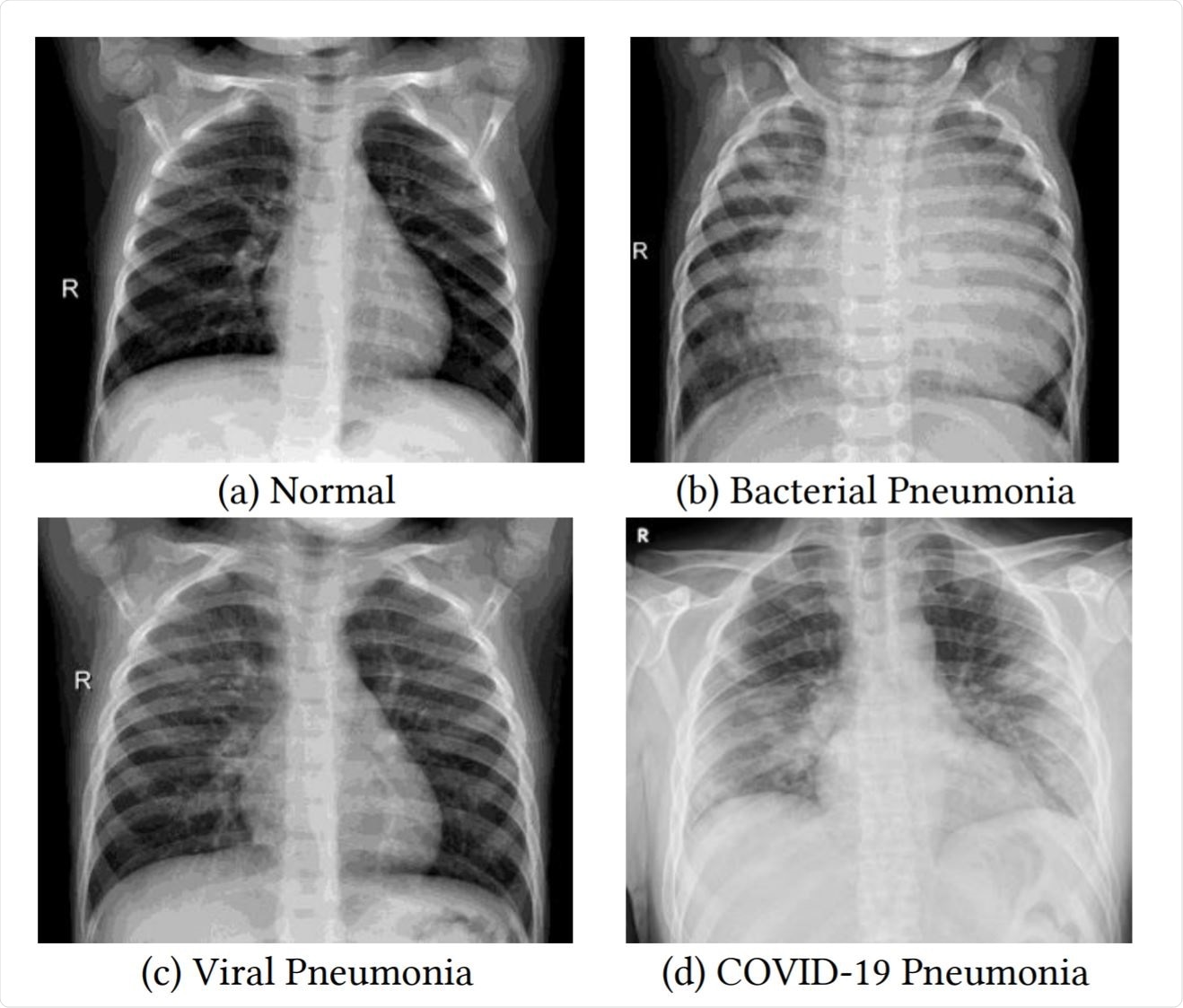

Covid 19 Chest Xray Detection : A Transfer Learning Approach

The coronavirus outbreak has had a devastating effect on people all around the world and has infected millions. The exponential spread of the disease makes it urgent to develop appropriate screening methods to detect the disease and take steps to mitigate it. In this study, models are developed to provide accurate diagnostics for multiclass classification (Covid vs No Findings vs Pneumonia).

Paper View on Github

Projects

Automatic Text Summarization with Pytorch and Transformers

Fine-tuned a T5ForConditionalGeneration (t5-base) model on a custom News Summary dataset to perform abstractive text summarization. Created a custom dataloader from data consisting of the core text of each news article and its human-generated summary. Kept a constant mini-batch size of 2 and trained the model for 3 epochs. The model weights were updated using the AdamW optimizer. Training was done with the PyTorch Lightning library.

View on Github

Roberta for Covid-19 Tweet Sentiment Classification

Widening the perspective on the state of the global pandemic by harnessing Twitter data and the powerful Roberta model by Facebook. Custom-cleaned tweets were fed to the Roberta tokenizer, which tokenizes them according to the model's configuration, and a custom RobertaModel followed by two linear layers was then used to perform sentiment analysis on the tweet data.

View on Github View on Kaggle

Generating Text with BART

Teaching BART, a denoising autoencoder, to generate a given text with PyTorch Lightning.

View on Github

LGBM Classifier + Optuna for Tabular data classification

Optuna is an automatic hyperparameter optimization software framework, particularly designed for machine learning. It features an imperative, define-by-run style user API. In this project I used Optuna's new integration API to automatically tune LGBM parameters for tabular data classification.

View on Github View on Kaggle

EfficientNetb4 for Leaf disease classification

At least 80% of household farms in Sub-Saharan Africa are affected by viral diseases, which are a major source of poor yields. As part of this Kaggle competition I fine-tuned an EfficientNetB4 model, using fastai as well as Keras, to classify images into four classes of diseased leaf variants and a normal class.

View on Github View on Kaggle

Ensemble Regression for Tabular data

An advanced ensemble regression combining an XGBoost regressor, a LightGBM regressor and a neural network. Predictions were first made with XGBoost and LightGBM separately, then these predictions were averaged and fed to a neural network to make the final predictions. This approach placed me in the top 10% of the competition, Tabular Playground Series - Jan 2021.
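The two-stage pipeline described above can be sketched in plain Python. This is a minimal, library-free illustration and not the competition code: the function names `average_predictions` and `fit_linear_neuron` are hypothetical, the base-model predictions are made-up numbers rather than real XGBoost or LightGBM output, and a single linear neuron trained by gradient descent stands in for the neural network.

```python
def average_predictions(preds_a, preds_b):
    """Element-wise mean of two base-model prediction lists."""
    if len(preds_a) != len(preds_b):
        raise ValueError("prediction lists must have the same length")
    return [(a + b) / 2.0 for a, b in zip(preds_a, preds_b)]


def fit_linear_neuron(inputs, targets, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error.

    Assumption: one neuron without activation is used here only as a
    stand-in for the second-stage neural network.
    """
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(inputs, targets)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(inputs, targets)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Made-up predictions standing in for the two base models.
xgb_preds = [1.0, 2.5, 2.5, 4.5]
lgbm_preds = [1.0, 1.5, 3.5, 3.5]
targets = [3.0, 5.0, 7.0, 9.0]

# Stage 1: average the base-model predictions.
blended = average_predictions(xgb_preds, lgbm_preds)

# Stage 2: learn a mapping from the blended prediction to the target.
w, b = fit_linear_neuron(blended, targets)
final_preds = [w * x + b for x in blended]
```

In practice the second stage should be fitted on out-of-fold predictions so that it does not learn from base-model outputs on data those models were trained on.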

View on Github View on Kaggle

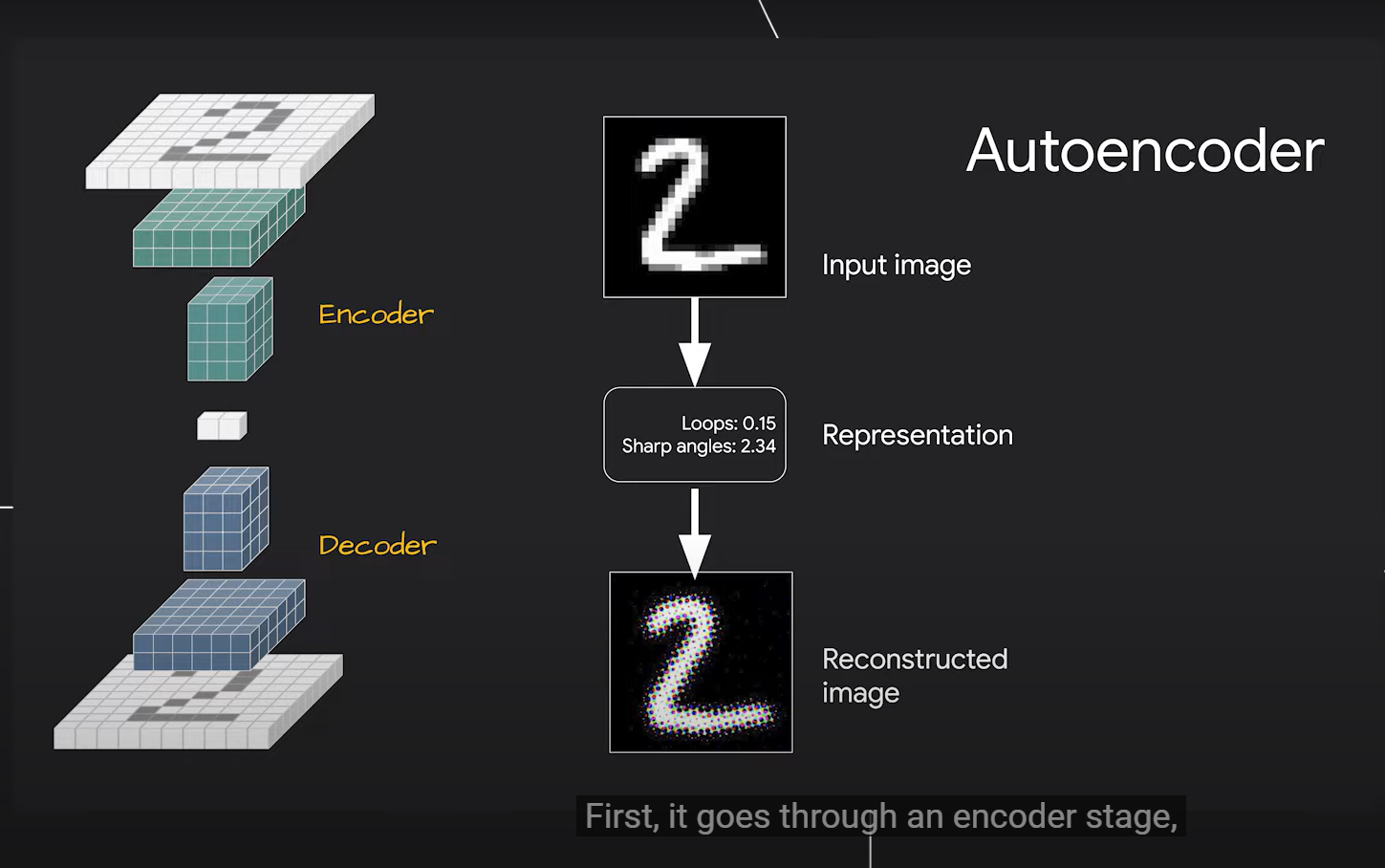

Generating Digits with Variational AutoEncoder

Generating Digits using Variational AutoEncoders in Keras. Inspired by Francois Chollet's tutorial in his book - Deep Learning with Python.

View on Github

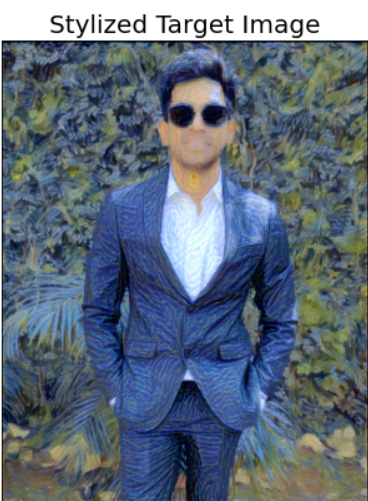

Neural Style Transfer

In this kernel I implemented, in PyTorch, the style transfer method outlined in the paper Image Style Transfer Using Convolutional Neural Networks by Gatys et al. In this paper, style transfer uses the features found in the 19-layer VGG network, which comprises a series of convolutional and pooling layers and a few fully-connected layers. In the image below, the convolutional layers are named by stack and their order in the stack.
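The style part of this method compares Gram matrices of the convolutional features, which capture correlations between channels. A minimal sketch of that step follows, written in plain Python rather than PyTorch so that it runs without dependencies. The function names are hypothetical, each feature map is assumed to be given as one flattened list per channel, and the loss uses a plain mean instead of the paper's normalisation constant.

```python
def gram_matrix(features):
    """Gram matrix of a feature map given as one flattened list per channel.

    Entry (i, j) is the dot product of channel i with channel j.
    """
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) for row_j in features]
        for row_i in features
    ]


def style_loss(style_features, generated_features):
    """Mean squared difference between the Gram matrices of two feature maps.

    Assumption: both feature maps have the same number of channels.
    """
    gram_s = gram_matrix(style_features)
    gram_g = gram_matrix(generated_features)
    n = len(gram_s)
    total = sum(
        (gram_s[i][j] - gram_g[i][j]) ** 2 for i in range(n) for j in range(n)
    )
    return total / (n * n)


# Toy feature map: 2 channels, 2 spatial positions each.
style = [[1.0, 2.0], [3.0, 4.0]]
blank = [[0.0, 0.0], [0.0, 0.0]]

style_gram = gram_matrix(style)
same_loss = style_loss(style, style)
blank_loss = style_loss(style, blank)
```

In the full method this loss is computed at several VGG layers and combined with a content loss, and the generated image is updated by gradient descent.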

View on Github

Text Regression using transformers and Pytorch

In this recently launched competition, we are supposed to build algorithms to rate the complexity of reading passages for grade 3-12 classroom use. I created a simple Roberta-large regression baseline in native PyTorch, trained with root mean squared error (RMSE) as its loss function to predict a continuous score.
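For reference, the root mean squared error used as the loss can be sketched as below. This is a dependency-free illustration with a hypothetical function name `rmse`, not the PyTorch code from the notebook, and the sample scores are made up.

```python
import math


def rmse(predictions, targets):
    """Root mean squared error between two equal-length lists of numbers."""
    if not predictions or len(predictions) != len(targets):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)
    return math.sqrt(mse)


# Made-up readability scores, for illustration only.
perfect = rmse([1.0, 2.0], [1.0, 2.0])
off = rmse([0.0, 0.0], [3.0, 4.0])
```

Because of the square root, the loss is in the same units as the target score, which makes it easier to read than the mean squared error.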

View on Github

Text Classification with ULMFIT fastai.

ULMFiT is an effective transfer learning method that can be applied to any task in NLP, and it introduces techniques that are key for fine-tuning a language model. With only 100 labeled examples, it matches the performance of training from scratch on 100x more data.

View on Kaggle